Heavy CPU usage in Reaper with realtime privileges

RT priorities work in mysterious ways

Context

I've been using Linux for audio production for the past 7 years right now. Despite the lack of instrument and effects plugins, things work great.

Well, except for this another thing.

I get dreadful performance if I add my user to the audio group. This enables user-level processes to run with real-time priorities. The privileges are defined in /etc/security/limits.conf:

@audio - rtprio 95

@audio - memlock unlimited Well that's nice to know, but what about my performance issue? If I just leave my user out of the audio group, I get a relatively smooth UI in Reaper, but whenever I go record something, the audio buffering just doesn't keep up, I get crackles on even moderately demanding instrument and effects chains.

In addition to being incredibly annoying in sound, the pops and crackles indicate that the audio buffer was not filled in time, forcing the playback to play an unfinished buffer. And unfinished buffer means some random, corrupt data which for sure won't sound good. And thus the pops and crackles.

So what to do?

Once RT privileges have been enabled, my CPU usages spikes through the roof. That's our first, simple clue.

Sorry to interrupt you for a moment

I'm fortunate enough to have a reasonably powerful laptop with a similar setup. I check there and my user does belong to the audio group. CPU priorities also look good, so does overall performance.

Also, I'm using an external USB soundcard on my desktop, which always creates slightly more latency than a laptop's integrated soundcard located directly in the PCI bus.

Maybe let's look at /proc/interrupts? Any interrupts overlapping too much on one CPU would for sure cause performance issues. Let's keep an eye on that:

watch -n 0.1 cat /proc/interruptsHere we can see the amount of interrupts done per CPU core and the source of the interrupt.

My CPU has 16 logical cores, each column here (CPU0 to CPU15) corresponds to one of them. In the right hand side of each row, the cause of the interrupt is listed.

Every 0.1s: cat /proc/interrupts evo: Fri Sep 6 07:17:34 2024

CPU0 CPU1 CPU2 CPU3 CPU4 CPU5 CPU6 CPU7 CPU8 CPU9 CPU10 CPU11 CPU12 CPU13 CPU14 CPU15

67: 248836593 0 0 0 0 11855265 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:03:00.0 0-edge xhci_hcd

75: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:22:00.0 0-edge xhci_hcd

84: 1843 0 0 0 0 74 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.2 0-edge xhci_hcd

93: 98317 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:26:00.3 0-edge xhci_hcd

101: 78365364 0 0 0 0 6339503 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.0 0-edge amdgpu

103: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSIX-0000:26:00.2 0-edge psp-1

104: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSIX-0000:26:00.2 1-edge ccp-1

105: 965 21803564 0 0 0 0 0 0 0 0 0 36720 0 0 0 0 IR-PCI-MSIX-0000:1e:00.0 0-edge enp30s0

107: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3021 IR-PCI-MSIX-0000:01:00.0 16-edge nvme0q16

109: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.1 0-edge snd_hda_intel:card0

NMI: 80 11597 11992 11641 12004 11706 12020 11705 11969 11660 11964 11683 11951 11645 11974 11687 Non-maskable interrupts

LOC: 1512622651 264903049 302009566 280517545 292627610 235121865 405351050 237000289 295252539 239082321 304910590 252729889 319355274 296553762 290293745 233191224 Local timer interrupts

SPU: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Spurious interrupts

PMI: 80 11597 11992 11641 12004 11706 12020 11705 11969 11660 11964 11683 11951 11645 11974 11687 Performance monitoring interrupts

IWI: 275 385 9 5 249 12 22 230 19 5 9 88 12 11 13 272 IRQ work interrupts

RTR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 APIC ICR read retries

RES: 3758737 5312168 3792978 3273848 3851267 3382458 3971552 3438542 3144835 2680993 3172715 2759162 3262214 2862473 3398232 2956462 Rescheduling interrupts

CAL: 297846172 315893124 334316813 333297576 335472530 331803379 338329861 339236161 336837902 342289427 337166079 337278432 346701463 347494507 341664458 354906342 Function call interrupts

TLB: 17316479 16700260 17279616 16731805 17692213 17111019 17990114 17370089 18479647 18028922 17735578 17514137 17826721 17314256 18052571 17747879 TLB shootdowns

TRM: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Thermal event interrupts

THR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Threshold APIC interrupts

DFR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Deferred Error APIC interrupts

MCE: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Machine check exceptions

MCP: 879 853 853 852 852 852 853 853 853 853 854 855 855 855 855 855 Machine check pollsOne of the highest sources is amdgpu. Well, that's my GPU, so that's expected.

The soundcard on my PC is connected via a USB 3.0 port, so the source of interrupts for it is xhci_hcd . The interrupts caused by it are located in CPU0 and CPU5. So it's not spread really evenly. Even worse, interrupts by amdgpu are also mostly located in CPU0, so any audio handling done there is contested by handling graphcis calls.

Let's setup irqbalance to the background and take a look at the results again:

67: 249945864 0 0 0 0 11855265 445927 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:03:00.0 0-edge xhci_hcd

75: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:22:00.0 0-edge xhci_hcd

84: 1843 0 0 0 0 74 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.2 0-edge xhci_hcd

93: 98317 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:26:00.3 0-edge xhci_hcd

101: 78779206 0 0 0 0 6339503 0 0 0 124678 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.0 0-edge amdgpu

103: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSIX-0000:26:00.2 0-edge psp-1

104: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSIX-0000:26:00.2 1-edge ccp-1

105: 965 21832732 0 0 0 0 0 0 0 0 0 38233 0 0 0 0 IR-PCI-MSIX-0000:1e:00.0 0-edge enp30s0

107: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3809 IR-PCI-MSIX-0000:01:00.0 16-edge nvme0q16

109: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 IR-PCI-MSI-0000:25:00.1 0-edge snd_hda_intel:card0

NMI: 80 11710 12107 11753 12119 11820 12135 11819 12084 11774 12078 11796 12065 11758 12089 11801 Non-maskable interrupts

LOC: 1523436646 266333753 303759324 281958156 294399628 236534259 407098297 238387095 296571707 240250179 306241263 253919155 320697239 297746898 291626477 234377989 Local timer interrupts

SPU: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Spurious interrupts

PMI: 80 11710 12107 11753 12119 11820 12135 11819 12084 11774 12078 11796 12065 11758 12089 11801 Performance monitoring interrupts

IWI: 275 386 9 5 249 12 23 230 19 5 9 88 12 11 13 272 IRQ work interrupts

RTR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 APIC ICR read retries

RES: 3796590 5379566 3837739 3312638 3897533 3422601 4018063 3479823 3183580 2714077 3212495 2795388 3304022 2900512 3440744 2992330 Rescheduling interrupts

CAL: 298922165 316927265 335481455 334354152 336621694 332821868 339466606 340234565 338128749 343757213 338486814 338765905 348015017 348957519 342947851 356437295 Function call interrupts

TLB: 17327878 16711968 17294573 16755631 17707670 17129665 18003681 17384230 18493251 18041659 17750223 17529094 17842667 17327283 18067114 17763520 TLB shootdowns

TRM: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Thermal event interrupts

THR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Threshold APIC interrupts

DFR: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Deferred Error APIC interrupts

MCE: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 Machine check exceptions

MCP: 884 858 858 857 857 857 858 858 858 858 859 860 860 860 860 860 Machine check pollsNow the interrupts caused by the soundcard are handled on CPU6, while the GPU interrupts are handled in CPU9. So success?

Not quite. Now audio latency is just fine, but Reaper's UI performance is still at a point of being unusable.

Never underestimate the user in the dumbness of their configuration

Interestingly, one thing which I did not take into account is how the audio applications handle their improved rights of allocating real-time system resources. Sometimes they can be configured quite thoroughly.

This is all nice when you know what you're doing, but sometimes you just don't know. And it seems like past me was exactly like that.

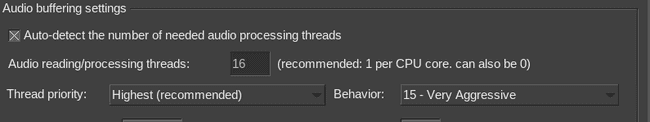

Let's take a look at what options Reaper provides:

We have thread priority set to highest and behavior set to 15 - very aggressive.

When using 15 - very aggressive, CPU gets to 80% usage while playback is on. Changing the behavior back 0-relaxed has dramatic effects: CPU usage is now basically identical to running without realtime privileges.

So why does this happen?

Simply put, it's the audio and UI threads competing for CPU time. The issue here is that when the audio threads get aggressive enough, with pre-emptive scheduling they will kick out any UI thread currently rendering.

As a dumb user my solution here was to remove my user account from the audio group altogether, which caused Reaper to no longer have access to real-time scheduling. With this change the audio processing threads would no longer kick out the UI threads, letting the UI respond to user input more quickly.

Performance profiling

To prove my point further, let's open some Linux profiling tools.

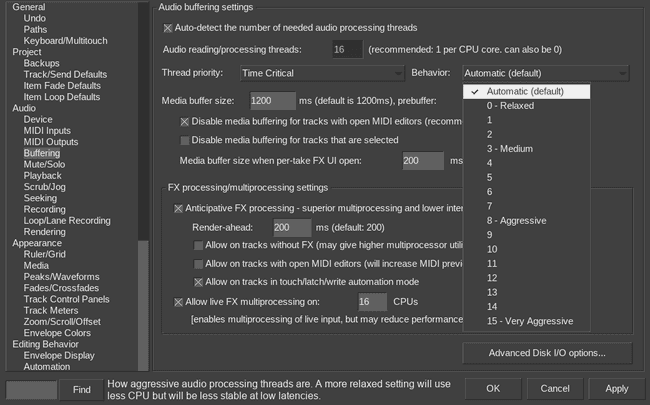

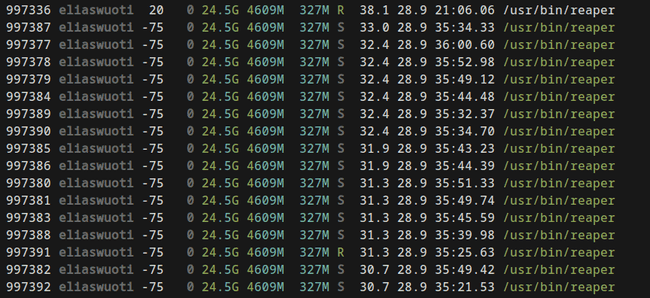

Starting with the simplest, let's open htop. The main thing we're interested in are the Reaper's threads and their CPU usages.

There's multiple threads with negative priorites, which means that they run in realtime. In addition to these the interesting bit is therad 997336, which has a priority of only 20, meaning that it's non-realtime. This the thread which renders the UI. If this thread is starved of CPU time, it will show up as the terrible UI lag I described previously.

Now that we know the threads, we can do some profiling on them. Let's record 30 seconds of profiling data with perf.

sudo perf record -F 99 -a -g -- sleep 30During the recording, I'll toggle between two modes of Reaper's audio buffering settings: 0-relaxed and 15-very aggressive.

The run is started with aggressive settings, after which in the middle I switch to the relaxed setting. In the end, I'll switch back to the aggressive setting one last time.

Visualization

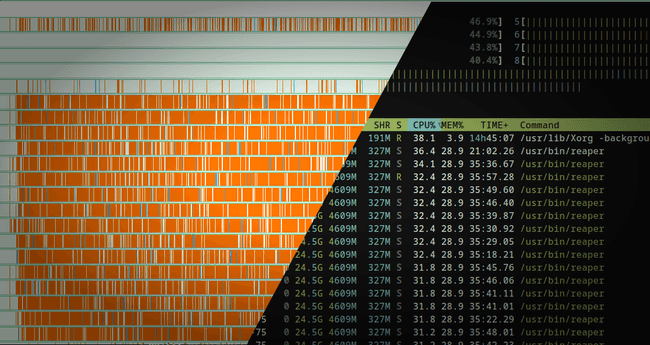

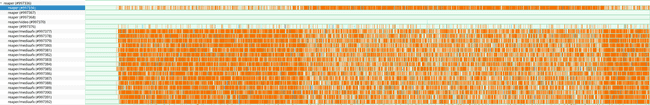

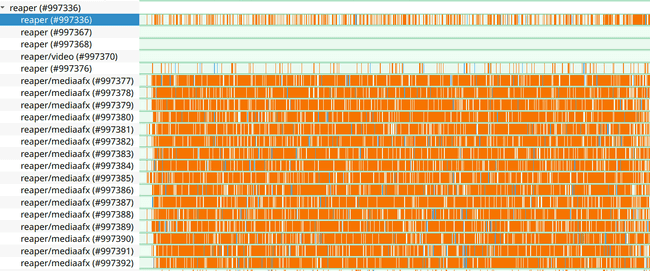

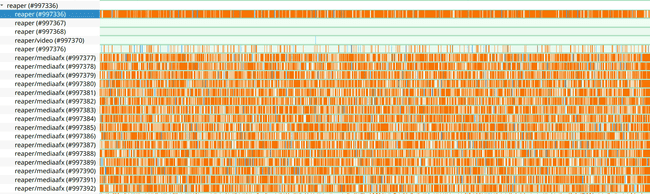

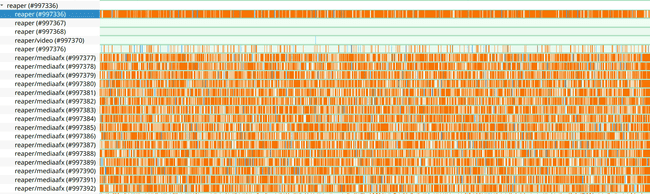

For visualization I'm using hotspot, an excellent GUI visualizer for perf data. After opening the perf.data file in it, let's filter the summary view with reaper and look at the timeline of events:

In the image we have a rough list of Reaper threads and their respective amount of events on the CPU. The x-axis illustrates the time elapsed.

The thread 997336 is the UI thread, while the others below it are the audio processing threads. In the visualization, orange means that the thread in question has had an event on the CPU, while white means that the thread is not off the CPU. So orange parts mean high CPU usage and white parts mean less CPU usage.

Zooming in to the left-hand side, you can see how all much orange all the audio threads have. This is the beginning where I have the most aggressive audio buffering behavior enabled.

But once we move to the middle, the UI thread starts to get more orange, while the audio threads start to have more white gaps in them. This is where I switched the audio buffering behavior to the most relaxed one.

The audio processing threads spend less time hogging the CPU, giving the UI thread sufficient time to render the UI.

In the final section, the thread priorities reverse once again: audio processing threads start hogging resources, leaving the UI thread in the dust.

With this, my initial hunch was correct. I'll set the setting back to Automatic, as that seems to work well enough for now.

Pay attention to how you configure your software

But why did this matter at all? In an audio production application the only thing which matters is the speed at which it can process the audio, right?

Well, depends on your use cases. In terms of performance, you only need your application to handle the current workload, no more, no less.

Sure, you could decrease the size of the audio buffer you're using, and thus get lower latencies. But if a moment later you're only doing arrangement work or mixing, you won't have much of a need for super-low latencies. Instead what you want is a snappy, responsive UI.

So if you don't get any audio buffering issues, there's no need to force the buffering any further. Instead, remember that everything is a compromise and you need to configure your software accordingly.

In most cases you just want a compromise.

PS: To read more on Linux pro audio, check the Arch wiki, for instance.